I have participated in a few technical interviews and have discussed data engineering topics with people, along with the things they have done in the past. Most of them are familiar with Apache Spark, which is, unsurprisingly, one of the most widely adopted frameworks for big data processing. What I have been asked, and what I often ask them, is about simple concepts around RDD, DataFrame, and Dataset and the differences between them. It sounds quite fundamental, right? Not really. If we take a closer look at them, there are lots of interesting things that can help us understand them and choose which is best suited for our project.

11 posts tagged with "Bigdata"

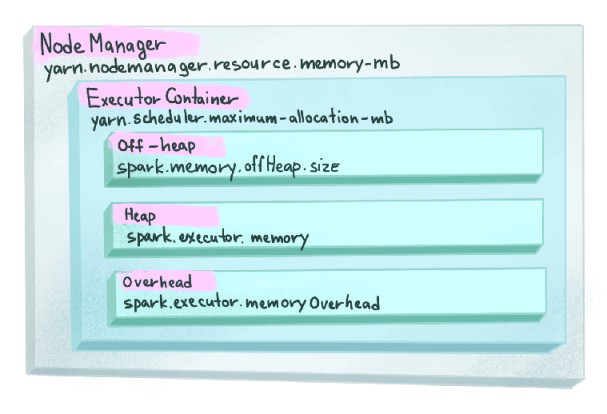

How Is Memory Managed In Spark?

Spark is an in-memory data processing framework that can quickly perform processing tasks on very large data sets and can also distribute those tasks across multiple computers. Spark applications are memory-heavy, so memory management plays a very important role in the whole system.

Authorize Spark 3 SQL With Apache Ranger Part 2 - Integrate Spark SQL With Ranger

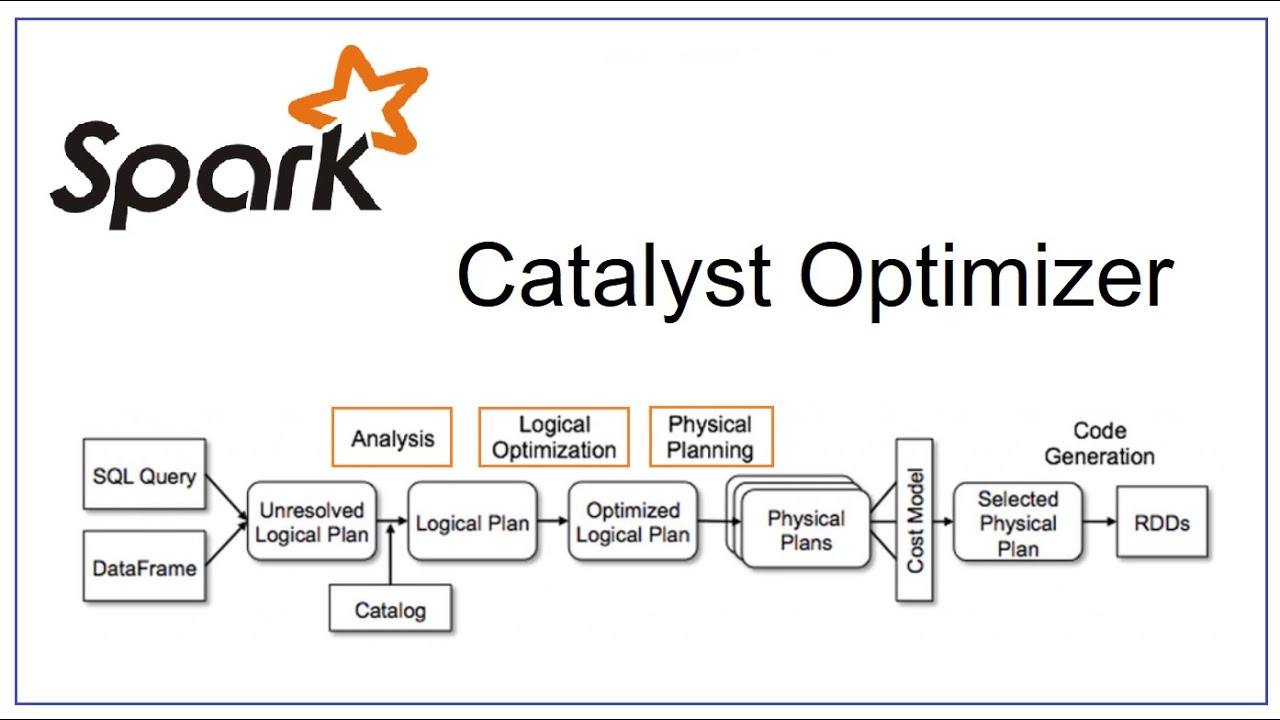

In the previous post, I installed a standalone Ranger service. In this article, I show how we can customize the logical plan phase of the Spark Catalyst Optimizer in order to achieve authorization in Spark SQL with Ranger.

Authorize Spark 3 SQL With Apache Ranger Part 1 - Ranger installation

Spark and Ranger are widely used by many enterprises because of their powerful features. Spark is an in-memory data processing framework, and Ranger is a framework to enable, monitor, and manage comprehensive data security across the Hadoop platform. Ranger can therefore be used to handle authorization for Spark SQL, and this post will walk you through the integration of the two frameworks. This is the first part of the series, where we install the Ranger framework on our machine, along with Apache Solr for auditing.

Spark Catalyst Optimizer And Spark Session Extension

The Spark Catalyst optimizer sits at the core of Spark SQL. Its purpose is to optimize structured queries expressed in SQL or through the DataFrame/Dataset APIs, minimizing application running time and cost. When using Spark, people often treat the Catalyst optimizer as a black box, assuming that it works mysteriously without really caring what happens inside it. In this article, I will go in depth into its logic, its components, and how the Spark session extension can be used to change Catalyst's plans.

MySQL series - Indexing

Indexing is a method for making queries faster and is a very important part of improving performance. For large tables, well-chosen indexes increase query speed overall; however, this is often not taken into account in the table design process. This article covers the types of indexes and how to use them properly.

MySQL series - Multiversion concurrency control

Storage engines usually do not use a simple row-lock mechanism. To achieve good performance in a highly concurrent read and write environment, they implement row locking with a certain amount of complexity, and the method often used is multiversion concurrency control (MVCC).

MySQL series - Transaction In MySQL

The next article in the MySQL series is about transactions, a very common operation in MySQL in particular and in relational databases in general. Let's get into the article.

MySQL series - MySQL Architecture Overview

Hello everyone. Recently, I did some research on MySQL because I think anyone doing data engineering should go in depth with at least one relational database. Once you have a deep understanding of one RDBMS, you can easily learn others, since they have many similarities. Over the next few posts, I will write a series about MySQL, and this is the first article.

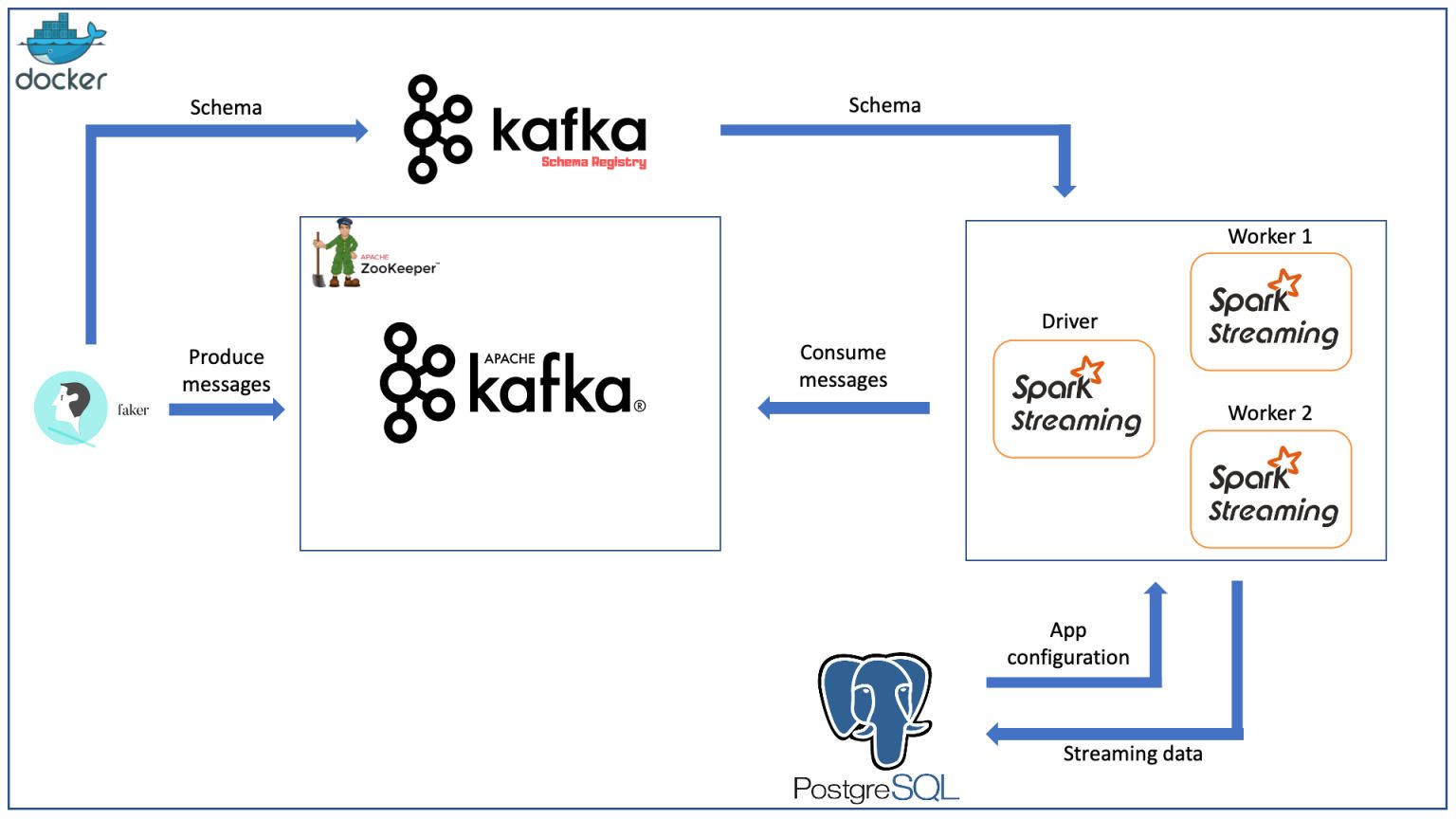

Create A Data Streaming Pipeline With Spark Streaming, Kafka And Docker

Hi guys, I'm back after a long time without writing anything. Today, I want to share how to create a Spark Streaming pipeline that consumes data from Kafka, with everything built on Docker.